Gesturing Toward Abstraction

Multimodal Convention Formation in Collaborative Physical Tasks

Kiyosu Maeda, William P. McCarthy, Ching-Yi Tsai, Jeffrey Mu, Haoliang Wang, Robert D. Hawkins, Judith E. Fan, Parastoo Abtahi

ACM CHI'26 PAPER | 13 APRIL 2026

How do people use gestures and language to establish conventions and adapt communication over repeated physical collaboration?

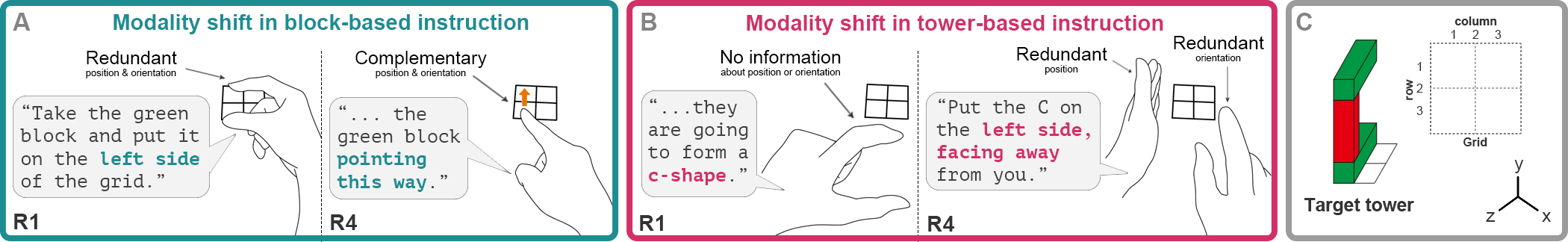

This work investigates how communication strategies evolve through repeated collaboration as people coordinate on shared procedural abstractions. We first conducted an online unimodal study (n = 98) using natural language to probe abstraction hierarchies. Then, in a follow-up lab study (n = 40), we examined how multimodal communication (speech and gestures) changed during physical collaboration. Based on the findings, we extend probabilistic models of convention formation to multimodal settings, capturing shifts in modality preferences.

Note

See the official project website here, where you can find the dataset, the code for both the unimodal and multimodal studies, and the app we built for viewing our data and experiment sessions.

I work with Kiyosu in the PSI lab on this project, helping him run experiments, code data, and analyze the results to distill insights.